Gardels Awards

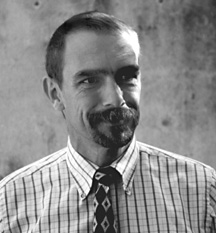

In Loving Memory of our friend and colleague, Kenneth D. Gardels – 1954-1999.

…think globally, act locally.

About the OGC Gardels Award

The Kenneth D. Gardels Award is a gold medallion presented each year by the OGC Board of Directors to an individual who has made exemplary contributions to OGC’s consensus standards process. Award nominations are made by members (the prior Gardels Award winners) and approved by the Board of Directors. The Gardels Award was conceived to memorialize the spirit of a man who dreamt passionately of making the world a better place through open communication and the use of information technology to improve the quality of human life.

Kenneth Gardels, a founding member and a director of OGC, coined the phrase “Open GIS.” Kenn died of cancer in 1999 at the age of 44. He was active in popularizing GRASS, the open-source Geographic Information System (GIS), and was a key figure in the Internet community of people who used and developed that software. Kenn was well known in the field of GIS and was involved over the years in many programs related to GIS and the environment. He was a respected GIS consultant to the State of California and to local and federal agencies, and frequently attended GIS conferences around the world.

Kenn is remembered for his principles, courage, and humility, and for his accomplishments in promoting spatial technologies as tools for preserving the environment and serving human needs.