The pace of technological change is increasing as new products are made available, with ground-breaking innovation often resulting in disruptive change. Coupled with this technological change is the explosion of data from new sources relevant to the geospatial domain, including imagery, sensor feeds, and real-time tracking data. Organisations in the public, private, and third sectors must remain agile and resilient to thrive in this emerging landscape.

Adopting new technological innovations in large, controlled IT enterprises can be difficult because of the associated costs, integration challenges, and organisational processes. The Open Geospatial Consortium (OGC) is a standards body that, for the last 30 years, has been innovating and creating community-driven Standards in the geospatial domain. These Standards are designed to ensure that data and services are Findable, Accessible, Interoperable, and Reusable (FAIR), while also ensuring that the resulting Standards suit the target domain and are straightforward to adopt. FAIR principles can also be applied to the integration of new technologies into large enterprises, so that the interaction patterns with those technologies are well known and the components that provide them are interchangeable.

Standardising AI/ML Training Data

An OGC Standard supporting a decidedly recent phenomenon is the OGC Training Data Markup Language for Artificial Intelligence (TrainingDML-AI). Artificial Intelligence (AI), Machine Learning (ML), and associated applications have been the subject of research for decades. However, only recently have the necessary computational power and, importantly, the data to train AI models become available. Training data is the lifeblood of AI. As the old axiom goes, “garbage in, garbage out”, and AI models are no different. Additionally, AI models and their inner workings can be opaque, acting as a ‘black box’ between inputs and outputs. Standardising the management of training data is therefore important for scientific endeavours, not least for repeatability but also for adherence to the FAIR principles.

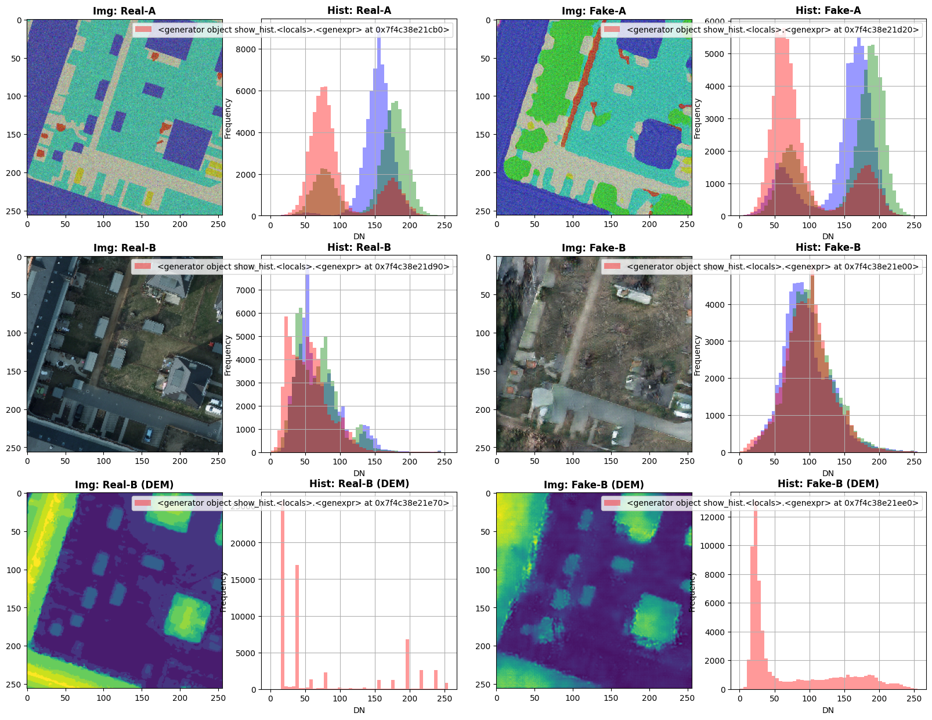

In addition to using AI for typical geospatial and imagery work, we are also seeing AI used to create simulated data for training, or even for nefarious purposes. Recording information about the input data to ML models in a standardised manner helps with managing outputs and establishing their authenticity. For example, the accompanying image is a completely fictional landscape created using training data of a UK city and a Generative Adversarial Network (GAN). Standardising the training dataset metadata enables others to create their own simulated data with the same feel, while understanding the input parameters and likely outcomes.
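As a minimal sketch of why machine-readable training data metadata helps with authenticity, the Python below records a content hash for every input item alongside basic dataset information, so that anyone reusing the dataset can check they hold exactly the same inputs. The class and field names (`TrainingDatasetRecord`, `task`, `licence`, and so on) and the example values are hypothetical illustrations; this is not the TrainingDML-AI encoding, which defines its own model and schemas.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class TrainingSample:
    """One input item, e.g. an image tile, identified by a content hash."""
    uri: str
    sha256: str
    label: str


@dataclass
class TrainingDatasetRecord:
    """Hypothetical provenance record (illustrative only, not the TrainingDML-AI schema)."""
    name: str
    version: str
    task: str
    licence: str
    samples: list = field(default_factory=list)

    def add_sample(self, uri: str, content: bytes, label: str) -> None:
        # Hash the raw bytes so later users can confirm they hold identical inputs.
        digest = hashlib.sha256(content).hexdigest()
        self.samples.append(TrainingSample(uri, digest, label))

    def verify(self, index: int, content: bytes) -> bool:
        # True only if `content` matches the hash recorded for sample `index`.
        return hashlib.sha256(content).hexdigest() == self.samples[index].sha256

    def to_json(self) -> str:
        # asdict recurses into the nested TrainingSample dataclasses.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = TrainingDatasetRecord(
    name="example-city-imagery",  # hypothetical dataset name
    version="1.0",
    task="image-generation",
    licence="CC-BY-4.0",
)
record.add_sample("tiles/0001.png", b"placeholder tile bytes", "urban")
print(record.to_json())
```

Because each hash is derived from the bytes themselves, any change to an input tile, whether accidental or malicious, makes `verify` return `False`.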

Quantum Computing

AI/ML is a current technology trend that shows how the technological landscape can change overnight, as the 2022 release of ChatGPT demonstrated. Another disruptive set of technologies, one that has been on the horizon for the past few decades, is quantum computing.

Although not likely to be a complete replacement for classical computing, quantum computing potentially offers an exponential speed-up for certain problems that are difficult for classical computers to manage. This is not because quantum computers are faster in the supercomputing sense; rather, their approach to solving problems is fundamentally different. Classical computers manipulate bits that are either 1 or 0, and chaining enough of these operations together enables them to do useful work. The fundamental building block of a quantum computer is instead the qubit, which can exist in a superposition of both 0 and 1.

There are also different types of quantum computer. Circuit-based quantum computers are the ones that, once large and reliable enough, are expected to perform the headline-grabbing factoring of large numbers and therefore crack RSA encryption, which is built on the principle that factoring large numbers is hard. The second type is quantum annealing, closely related to adiabatic quantum computing, which lends itself to solving optimisation problems.
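To make superposition more concrete, the sketch below simulates a single qubit classically in Python, representing its state as two complex amplitudes. It applies a Hadamard gate (a standard single-qubit gate) to put the qubit into an equal superposition and then samples measurements. This illustrates the underlying mathematics only; it is not how a real quantum computer or any vendor SDK is programmed, and the function names are hypothetical.

```python
import math
import random

# A qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# constrained so that |alpha|^2 + |beta|^2 == 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: the probability of reading 0 or 1 is the squared magnitude."""
    a, b = state
    return abs(a) ** 2, abs(b) ** 2


def measure(state, rng):
    """Sample one classical bit; measurement collapses the superposition."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)
print(probabilities(plus))            # approximately (0.5, 0.5)
print(probabilities(hadamard(plus)))  # approximately (1.0, 0.0): interference

rng = random.Random(42)
counts = [0, 0]
for _ in range(1000):
    counts[measure(plus, rng)] += 1
print(counts)  # roughly half zeros, half ones
```

Simulating n qubits this way needs 2^n amplitudes, which is one reason classical machines cannot simply emulate large quantum computers.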

The geospatial domain is full of optimisation problems. A typical example is the Travelling Salesperson Problem, in which a salesperson must visit several geographically dispersed locations, each exactly once, while completing the route at the lowest cost (for example, the shortest distance or the fastest drive time).

Another optimisation-style problem is the Structural Imbalance Problem, in which an algorithm attempts to split a social network (often one with a geospatial element) into groups. In a perfectly balanced network, all relationships within each group are friendly and all relationships between groups are hostile. In the real world a perfect split is rarely possible, so the result highlights the relationships that do not fit the model (strained relationships), which can be a predictor of conflict.
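The sketch below solves a tiny Travelling Salesperson Problem by brute force, assuming a handful of hypothetical sites on a flat plane with straight-line distances and a route that returns to its starting depot (a common formulation). Checking every ordering is exact, but the number of orderings grows factorially with the number of sites, which is why larger instances call for heuristics or, potentially, quantum approaches.

```python
import itertools
import math

# Hypothetical sites as (x, y) coordinates in kilometres on a flat plane.
SITES = {
    "depot": (0.0, 0.0),
    "A": (2.0, 1.0),
    "B": (5.0, 0.0),
    "C": (4.0, 4.0),
    "D": (1.0, 3.0),
}


def distance(p, q):
    # Straight-line (Euclidean) distance; a real system might use drive time.
    return math.dist(SITES[p], SITES[q])


def route_length(route):
    # Closed tour: sum legs between consecutive stops, then return to the start.
    legs = zip(route, route[1:] + route[:1])
    return sum(distance(p, q) for p, q in legs)


def brute_force_tsp(start, others):
    """Try every ordering of the other sites: exact, but O(n!) in their number."""
    best_route, best_len = None, math.inf
    for perm in itertools.permutations(others):
        route = (start,) + perm
        length = route_length(route)
        if length < best_len:
            best_route, best_len = route, length
    return best_route, best_len


route, length = brute_force_tsp("depot", ["A", "B", "C", "D"])
print(route, round(length, 2))
print(math.factorial(19))  # orderings this approach would check for 20 sites
```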

Practical quantum computing is now possible: several providers, such as Rigetti and D-Wave, along with the likes of Amazon, Google, and Microsoft, are starting to make their quantum computing resources available via the cloud. Although none of today's quantum computers is large enough to provide a quantum advantage for optimisation problems, larger machines that are planned are expected to. As a stopgap, hybrid classical/quantum approaches offer some of the advantages of quantum computing while already being able to solve practical problems.

As with many emerging technologies, the interaction patterns for these new quantum computers and hybrid solvers have not yet been standardised and are specific to each vendor's hardware. D-Wave's Ocean SDK, for example, provides patterns and calls covering many of the optimisation-style problems one might submit to a quantum solver. Perhaps the OGC's next move is to establish a Domain Working Group dedicated to quantum computing, to understand its impact on geospatial optimisation-style problems and the opportunities it affords.
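The Structural Imbalance Problem described earlier maps naturally onto the Ising model, whose low-energy states quantum annealers such as D-Wave's search for. In the sketch below, assuming a small hypothetical signed network, each person is assigned a spin of +1 or -1 (their group) and each relationship a coupling of +1 (friendly) or -1 (hostile); with the sign convention chosen here, minimising the energy minimises the number of frustrated relationships. An exhaustive search stands in for the quantum sampler, so this runs on any machine. With Ocean, a problem like this would typically be expressed as a binary quadratic model and passed to a sampler, but no Ocean API is used or implied here.

```python
import itertools

# Hypothetical signed social network: +1 = friendly, -1 = hostile.
EDGES = {
    ("Alice", "Bob"): +1,
    ("Alice", "Carol"): -1,
    ("Bob", "Carol"): -1,
    ("Carol", "Dave"): +1,
    ("Bob", "Dave"): +1,  # closes an unbalanced Bob-Carol-Dave triangle
}
NODES = sorted({n for edge in EDGES for n in edge})


def frustrated_edges(spins):
    """Relationships that break balance for a group assignment (spin +1 or -1).

    A friendly edge is frustrated when its ends are in different groups, and a
    hostile edge when its ends share a group, i.e. when J * s_u * s_v < 0.
    """
    return [(u, v) for (u, v), j in EDGES.items() if j * spins[u] * spins[v] < 0]


def ising_energy(spins):
    # E = -sum(J_uv * s_u * s_v); each frustrated edge raises the energy by 2.
    return -sum(j * spins[u] * spins[v] for (u, v), j in EDGES.items())


def exhaustive_ground_state():
    """Enumerate all 2**n assignments; an annealer would sample low-energy states."""
    best = None
    for values in itertools.product((-1, +1), repeat=len(NODES)):
        spins = dict(zip(NODES, values))
        if best is None or ising_energy(spins) < ising_energy(best):
            best = spins
    return best


ground = exhaustive_ground_state()
print(ground, frustrated_edges(ground))
```

Because the energy depends only on products of spins, flipping every spin gives the same energy, so there are always at least two ground states; which one is printed depends only on the enumeration order.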

Where next?

Keeping a close eye on emerging technologies and getting ahead of the curve on adoption enables Standards to be created that ease integration and bring meaningful change to organisations. The technology itself is the exciting part and can offer untold opportunities; the quicker and more smoothly these technologies can be adopted by the organisations that use them, the better the return for businesses, users, and citizens. Good, domain-driven Standards definition is key to a FAIRer technology landscape.

An ad-hoc quantum computing session will be held at 15:30 CET on March 27, 2024, as part of the 128th OGC Member Meeting in Delft, Netherlands, to discuss the possibility of Standardisation in the Quantum Computing domain.

The overall theme of the meeting is “Geo-BIM for the Built Environment”, and the meeting will also include a Geo-BIM Summit, a Land Admin Special Session, an Observational Data Special Session, a Built Environment Joint Session, a meeting of the Europe Forum, and the usual SWG and DWG meetings, as well as social and networking events.

Sam Meek is the Chief Technology Officer at Helyx Secure Information Systems Ltd.